I’m looking at side-band variants of the lunisolar orbital forcing because that’s where the data is empirically taking us. I had originally proposed solving Laplace’s Tidal Equations (LTE) using a novel analytical derivation published several years ago (see Mathematical Geoenergy, Wiley/AGU, 2019). Given that the LTEs form the primitive basis of the GCM-specific shallow-water approximation to oceanic fluid dynamics, the takeaway from the math results was that my solution involved a specific type of non-linear modulation or amplification of the input tidal forcing. However, this isn’t the typical diurnal/semi-diurnal tidal forcing; because of the slower inertial response of the ocean volume, the targeted tidal cycles are the longer-period monthly and annual ones. Moreover, as very few climate scientists are proficient at signal processing and all the details of aliasing and side-bands, this is an aspect that has remained hidden (again, thank Richard Lindzen for opening the book on tidal influences and then slamming it shut for decades).

Climate

Models and Simulation of the climate

Bay of Fundy subbands

The recent total solar eclipse revived lots of thought about Earth’s ecliptic plane. In terms of forcing, having the Moon temporarily in the ecliptic plane and also blocking the sun is not only a rare and (to some people) exciting event, it’s also an extreme regime with respect to the Earth, as the combined reinforcement is maximized.

In fact this is not just any tidal forcing; rather, it’s in the class of tidal forcing that has been overlooked over time in preference to the conventional diurnal tides. Many of those who tracked the eclipse as it traced a path from Texas to Nova Scotia may have noted that the moon covers lots of ground in a day, but that’s mainly because of the earth’s rotation. Removing that rotation to isolate the mean orbital path is tricky, and that longer time span is where long-period tidal effects and inertial motion can build up and show extremes in sea-level change. Consider the 4.53 year extreme tidal cycle observed at the Bay of Fundy in Nova Scotia (see Desplanque et al). This is predicted if the long-period lunar perigee anomaly (27.554 days, with its 8.85 year apsidal precession return cycle) amplifies the long-period lunar ecliptic nodal cycle, as every 9.3 years the lunar path intersects the ecliptic plane, once ascending and once descending, with the moon’s gravitational pull directly aligning with the sun’s. The predicted frequencies are 1/8.85 ± 2/18.6 = 1/4.53 & 1/182 (in cycles per year), the latter identified by Keeling in 2000. The other oft-mentioned tidal extreme is at 18.6 years, which Desplanque identifies as the other long-period extreme at the Bay of Fundy, and which NASA also identified as an extreme nuisance tide via a press release and a spate of “Moon wobble” news articles 3 years ago.
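The side-band arithmetic above can be checked with a few lines of Python. This is only a sketch of the sum and difference frequencies using the rounded periods quoted in the text; the function name `sideband_periods` and the constant names are illustrative assumptions, not taken from any published code.

```python
# Long-period lunar cycles quoted in the text, in years (rounded values).
APSIDAL_YEARS = 8.85   # perigee (apsidal) precession return cycle
NODAL_YEARS = 18.6     # lunar nodal cycle

def sideband_periods(carrier_years, modulator_years, harmonic=2):
    """Return the periods of the sum and difference side-bands.

    The carrier frequency 1/carrier_years is modulated by the given
    harmonic of the modulator frequency (harmonic/modulator_years).
    """
    f_carrier = 1.0 / carrier_years
    f_mod = harmonic / modulator_years
    upper = 1.0 / (f_carrier + f_mod)        # sum-frequency side-band
    lower = 1.0 / abs(f_carrier - f_mod)     # difference-frequency side-band
    return upper, lower

upper_years, lower_years = sideband_periods(APSIDAL_YEARS, NODAL_YEARS)
print(f"sum side-band period: {upper_years:.2f} years")
print(f"difference side-band period: {lower_years:.1f} years")
```

The sum band lands at about 4.53 years. The difference band is the reciprocal of a small difference of two nearly equal frequencies, so its period (a little over 180 years with these inputs) shifts noticeably with small changes in the rounding of the input periods.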

What I find troubling is that I can’t find a scholarly citation where the 4.53 year extreme tidal cycle is explained in this way. It’s only reported as an empirical observation by Desplanque in several articles studying the Bay of Fundy tides.

Proof for allowed modes of an ideal QBO

In formal mathematical terms of geometry/topology/homotopy/homology, let’s try proving that a wavenumber=0 cycle of east/west direction inside an equatorial toroidal-shaped waveguide can only be forced by the Z-component of an (x,y,z) vector where x,y lie in the equatorial plane.

To address this question, let’s dissect the components involved and build the proof within the constraints of geometry, topology, homotopy, and homology, focusing on valid mathematical principles.

Are the QBO disruptions anomalous?

Based on the previous post on applying Dynamic Time Warping (DTW) as a metric for LTE modeling of oceanic indices, it makes sense to apply the metric to the QBO model of atmospheric winds. A characteristic of QBO data is the sharp transitions at wind reversals. As described previously, DTW allows a fit to adjust the alignment between model and data without incurring the potential over-fitting penalty that a conventional correlation coefficient often leads to.
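To illustrate why DTW is forgiving of small timing offsets at sharp transitions, here is a minimal sketch of the textbook dynamic-programming DTW distance applied to a synthetic square-wave-like series. It is not necessarily the DTW variant used for the QBO fits; the function name `dtw_distance` and the test series are assumptions made for illustration.

```python
import numpy as np

def dtw_distance(model, data):
    """Classic O(n*m) dynamic time warping distance with absolute-difference cost."""
    a = np.asarray(model, dtype=float)
    b = np.asarray(data, dtype=float)
    n, m = len(a), len(b)
    # cost[i, j] = minimal accumulated cost aligning a[:i] with b[:j]
    cost = np.full((n + 1, m + 1), np.inf)
    cost[0, 0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            step = abs(a[i - 1] - b[j - 1])
            cost[i, j] = step + min(cost[i - 1, j],      # stretch data point
                                    cost[i, j - 1],      # stretch model point
                                    cost[i - 1, j - 1])  # advance both
    return cost[n, m]

# Synthetic series with sharp reversals, and a "model" that is one sample late.
data = np.array([1, 1, 1, -1, -1, -1, 1, 1, 1, -1, -1, -1], dtype=float)
model = np.roll(data, 1)

pointwise = np.sum(np.abs(model - data))   # rigid sample-by-sample mismatch
warped = dtw_distance(model, data)         # mismatch after optimal alignment
print(f"pointwise mismatch: {pointwise}, DTW distance: {warped}")
```

A one-sample lag is penalized at every reversal by the rigid comparison, while the warped alignment absorbs most of it, which is the behavior that makes DTW attractive for data with abrupt transitions.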

Dynamic Time Warping

It is useful to note that the majority of the posts written for this blog are in support of the mathematical analysis formulated in Mathematical Geoenergy (Wiley/AGU, 2018). As new data become available and new techniques for model fitting & parameter estimation (aka inverse modeling, predominantly from the machine learning community) are suggested, an iterative process of validation, fueled by the latest advancements, ensures that the GeoEnergyMath models remain robust and accurately reflective of the underlying observed behaviors. This of course should be done in conjunction with submitting significant findings to the research literature pipeline. However, as publication is pricey, my goal is to make the cross-validation so obvious that I can get an invitation for a review paper, with submission costs waived. Perhaps this post will be the deal-maker (certainly not the deal-breaker), but you can be the judge.

Full Wave Forcing

MSet

Global Models of Sea Surface Temperature

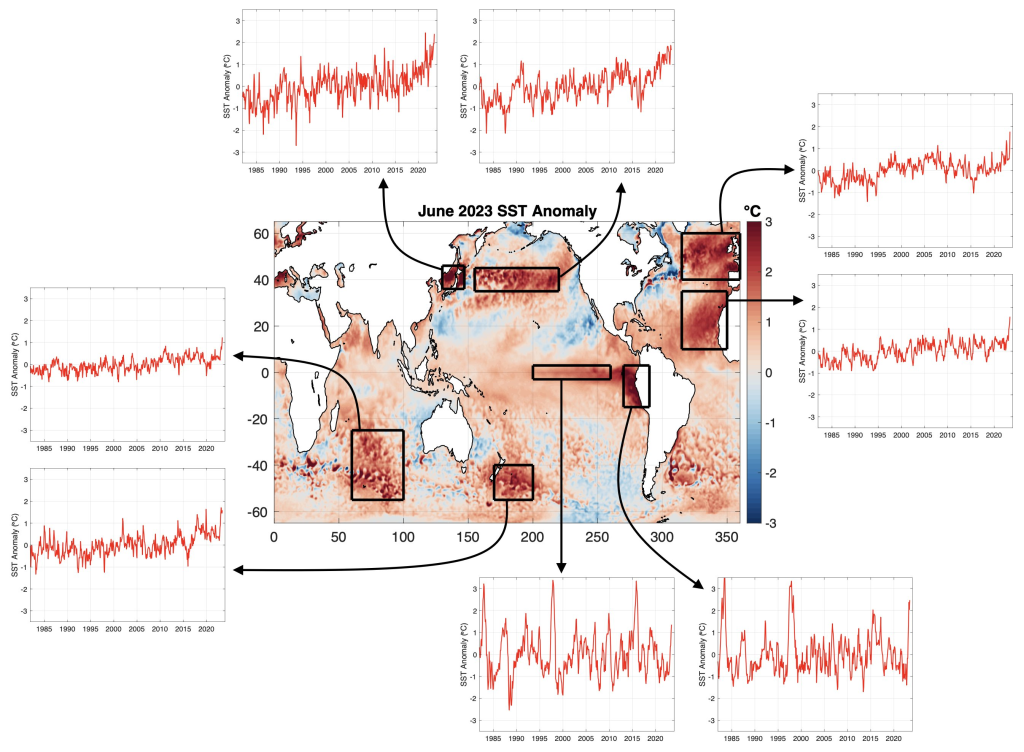

This is a set of 6 EOFs that describe the global SST in terms of a set of orthogonal time-series: essentially non-overlapping, each having a cross-correlation of ~0.0 with the others, like a sine/cosine pair, but in both spatial and temporal dimensions.
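The orthogonality property can be seen in a small sketch that extracts EOFs by singular value decomposition. The field here is synthetic random data standing in for gridded SST anomalies, and all variable names are assumptions; it is not the procedure used to produce the EOFs in the figure.

```python
import numpy as np

# Synthetic stand-in for an SST anomaly field: rows are months, columns are grid cells.
rng = np.random.default_rng(0)
n_time, n_space = 240, 50
field = rng.standard_normal((n_time, n_space))

# Remove the time mean at each grid cell so the decomposition acts on anomalies.
anomalies = field - field.mean(axis=0)

# SVD: columns of u give the temporal patterns, rows of vt the spatial patterns.
u, s, vt = np.linalg.svd(anomalies, full_matrices=False)

n_modes = 6
pcs = u[:, :n_modes] * s[:n_modes]   # principal-component time series, one per column
eofs = vt[:n_modes]                  # spatial EOF patterns, one per row

# Cross-correlation between the time series, and overlap between the spatial patterns.
pc_corr = np.corrcoef(pcs, rowvar=False)
eof_gram = eofs @ eofs.T

print(np.round(pc_corr, 6))
print(np.round(eof_gram, 6))
```

Both printed matrices come out as the identity to numerical precision, meaning the modes are mutually uncorrelated in time and orthogonal in space.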

No Dice

The comic strip reinforces how poor El Nino forecasts have been in the past. Soon enough machine learning will reproduce the correlation to long-period tidal forcing, substantiated by extensive cross-validation. The interval between 1940 and 1950 was not touched by a hand of god (i.e. the fitting procedure).

The Power of Darwin

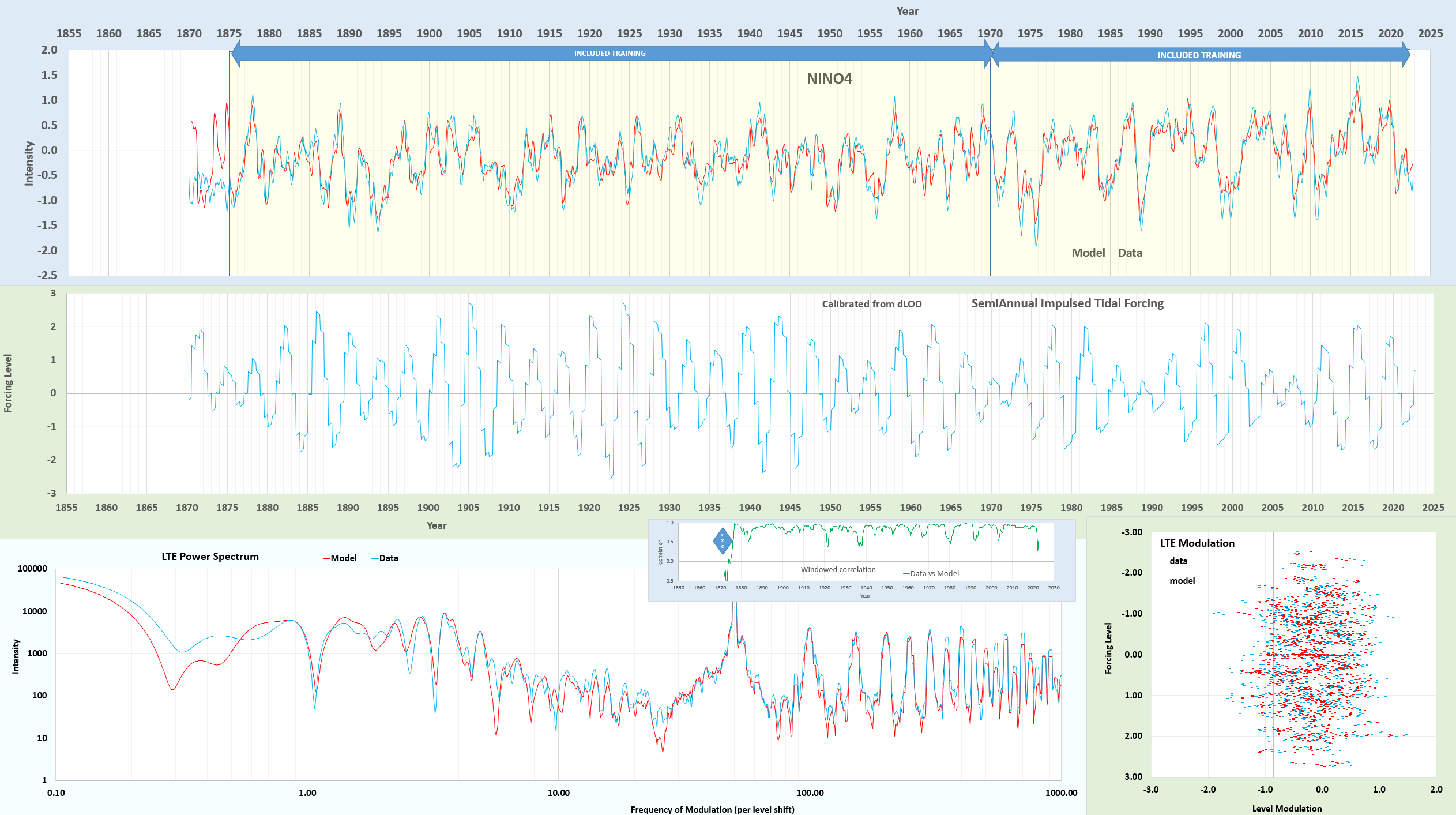

Using the LTE model on the NINO4 ENSO time-series, I excluded the 1870-1875 interval for cross-validation. The fit below starts from scratch with a dLOD-calibrated initial forcing and then allows the values to vary slightly, while the dLOD remains at 0.9999668 of the initial calibration.

The cross-validation doesn’t look good; the model is actually anti-correlated with the data over that interval, with a CC of -0.5. But the region before 1875 appears suspiciously flat in any case (see the data on the KNMI site); the only certainty is that the El Nino peak at 1877-1878 is real and not estimated.

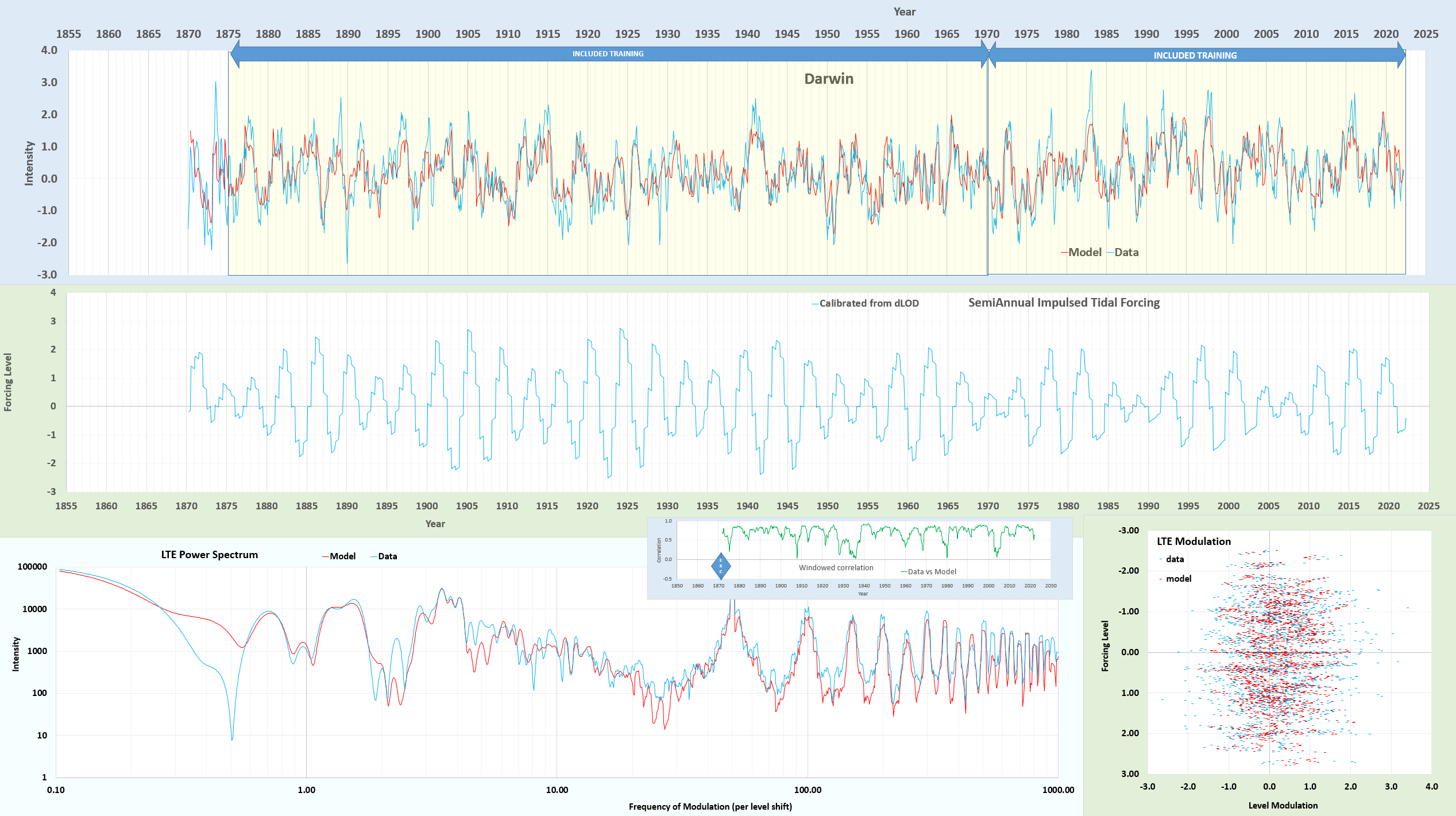

So, the next step is to take the fit to NINO4 and apply it to the Darwin (SOI) time-series, using the same fitting interval from 1875 to present. Immediately, it captures the 3 positive excursions in the interval 1870 to 1875. And then letting the fit proceed to a high CC, the result is shown below:

The resultant fit in the excluded cross-validation region reaches a CC of 0.58, thus reversing the anti-correlation and suggesting that the NINO4 time-series data prior to 1875 is likely incorrect.
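For readers who want to reproduce this kind of check, the following is a hedged sketch of how a correlation coefficient restricted to a held-out interval can be computed. The series are synthetic placeholders (not NINO4, Darwin, or LTE model output), and `holdout_cc` is a hypothetical helper name.

```python
import numpy as np

def holdout_cc(years, model, data, start, stop):
    """Pearson correlation between model and data restricted to start <= year < stop."""
    mask = (years >= start) & (years < stop)
    return np.corrcoef(model[mask], data[mask])[0, 1]

# Synthetic monthly series standing in for an index and two candidate models.
years = np.arange(1870.0, 1880.0, 1.0 / 12.0)
data = np.sin(2.0 * np.pi * years / 3.7)
model_aligned = 0.8 * data + 0.1   # same shape, different scale and offset
model_flipped = -data              # sign-reversed over the whole record

cc_aligned = holdout_cc(years, model_aligned, data, 1870.0, 1875.0)
cc_flipped = holdout_cc(years, model_flipped, data, 1870.0, 1875.0)
print(f"aligned model CC in hold-out: {cc_aligned:.2f}")
print(f"flipped model CC in hold-out: {cc_flipped:.2f}")
```

The point of restricting the mask to the excluded years is that the fitting procedure never sees those samples, so the sign and size of the CC there reflect prediction rather than fitting.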

The fit data is at https://gist.github.com/pukpr/c26f1da00337e92dbb47671ca48af2cf?permalink_comment_id=4639783#gistcomment-4639783. The main modifications to the tidal factors are in the very long periods. These values start small due to the dLOD calibration (the differential filters out low frequencies), but the 4.42 year amplitude is nearly tripled after the fit, and the 8.85 & 9.3 year amplitudes are up by 50%. The 18.6 year is only up 14%, and the 3rd order 6 year and 15.9 year are down 63% and up 45% respectively. All the fortnightly and monthly values are stable. This is perhaps reasonable considering how much the LOD drifts over time at low frequency, and that the calibration is restricted to post-1962.

The Big 10 Climate Indices

The above diagram is courtesy of Karnauskas.

Overall I’m confident in the status of the published analysis of Laplace’s Tidal Equations in Mathematical Geoenergy, as I can model each of these climate indices with precisely the same (save one ***) tidal forcing, all calibrated by LOD. The following Threads allow interested people to contribute thoughts:

- ENSO – https://www.threads.net/@paulpukite/post/CuWS8MFu8Jc

- AMO – https://www.threads.net/@paulpukite/post/Cuh4krjJTLN

- PDO – https://www.threads.net/@paulpukite/post/Cuu0VCypIi5

- QBO – https://www.threads.net/@paulpukite/post/CuiKQ5tsXCQ

- SOI (Darwin & Tahiti) – https://www.threads.net/@paulpukite/post/Cuu2IkBJh55 => MJO

- IOD (East & West) – https://www.threads.net/@paulpukite/post/Cuu9PYvJAG2

- PNA – https://www.threads.net/@paulpukite/post/CuvAVR7JN7R

- AO – https://www.threads.net/@paulpukite/post/CuvEz37JPFV

- SAM – https://www.threads.net/@paulpukite/post/CuvLZ2CMt1X

- NAO – https://www.threads.net/@paulpukite/post/CuvNnwns2la

(*** The odd one out is QBO, which is a global longitudinally-invariant behavior, meaning that only a couple of tidal factors are important.)

Is the utility of this LTE modeling a groundbreaking achievement? => https://www.threads.net/@paulpukite/post/CuvNnwns2la